Flyway seems more popular than

Liquibase in Java world. Coming back to Java after few years of joy with

Grails and it's much more flexible db migration solution offered by

grails database-migration plugin which has

Liquibase under covers, I certainly felt little limited flying in with Java-Flyway in the very first couple of hours of exploring it.

Liquibase offers more flexibility through a ledger, a change-log XML file in which you define the order of your migration scrips.

Grails database-migration plugin enhances migration scripts typically written in SQL with added DSL Groovy support. Also, the change-log file can be in groovy instead of XML. XML was once hot and is a legacy now (except for Maven, it's still modern).

Grails database-migration plugin offers full power of dealing with database migrations including full support for generating base-level or starting migration script, incremental change scripts, a rollback mechanism etc. The documentation is also top notch.

With

Flyway, you do not have that flexibility dealing with the order or migration scripts through change-log like ledger file. You have to follow version-embedded filename (SQL or Java) conventions. It is highly recommended to follow timestamp based filename versioning. I am yet to explore it's Java way of dealing with complex migrations, but I am sure it is not going to be as pleasing as working with database migrations in Grails projects with expressive nature of Groovy code.

There are tons of articles comparing both

Flyway and

Liquibase. This post is not to compare, but some exploration of

Flyway and JPA capabilities with

Grails database-plugin mindset in a Java-based

Spring Boot project with

JPA.

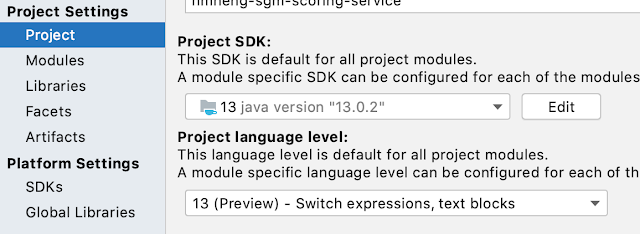

Environment: Java 13, Spring Boot 2.2.4.RELEASE, PostgreSQL 12.1, Maven 3.6.2 on

macOS High Sierra 10.13.6

Generate BASE DDL

It is tempting to start hand-coding Flyway SQL scripts once you make your initial domain model ready with JPA annotations. This is highly error prone and disconnects your domain model powered with JPA from DB in the process of initializing DB with schema and getting it validated against the model. One way to achieve this is to generate DDL scripts from the model.

I prefer to have DDL scripts generated than hand-coding. JPA has this feature and Hibernate offers a decent implementation. This will give you a jumpstart with db migration scripts. You can take generated script by the well-known tool:

copy and paste into Flyway migration script file and polish it further. This way, your model gets verified through the generated script taken into Flyway script and applied to DB. Thus any discrepancies between the model and DB can be avoided later in the game.

In order to get the DDL script generated, you need to make some run-time configuration changes for your local environment (the environment for which you need to get DDL generated). There are three ways to do this (at least the possible ways I've explored).

Option-1: Make changes to your environment

properties/

yml file as shown below:

bootstrap-local.yml

spring:

jpa:

properties:

hibernate:

# generating DDL - add me, Hibernate 5.1.0 onwards the default end of SQL statement delimiter is none in generated DDLs

hbm2ddl.delimiter: ';'

# generating DDL - add me

javax:

persistence:

schema-generation:

scripts:

action: create

create-target: create.sql

flyway:

# generating DDL, make sure I am turned off

enabled: false

Run your app with the above changes, and you will have

create.sql file generated in the directory where you run your app from. Examine and make any necessary changes to the DDL generated before copying that into Flyway Base SQL script.

Revert the changes done to your environment

properties/

yml file and bring up the application. Flyway should be flying happily taking the base DDL script file and applying it to your database.

Option-2: Set those properties on the maven command line (

*fine-print: Due to some reason, this option doesn't work consistently for me, I am not at all happy with Spring Boot Maven Plugin's documentation. You need to depend on extensive and tireless search to find out how to get this done :( )

Alternatively, you can simply override those run-time config properties for your local env in the maven command and get the DDL generated. This way you don't have to temporarily change your local run-time config file every time when you need to generate DDL and revert it afterwards. An example of running maven wrapper command on the root project when you have a spring-boot project (

my-service-api) as one of modules, is shown below:

./mvnw -pl myservice-api clean install spring-boot:run -Dspring-boot.run.profiles=local -DskipTests \

-Dspring-boot.run.arguments=\

--spring.flyway.enabled=false,\

--spring.jpa.properties.javax.persistence.schema-generation.scripts.action=create,\

--spring.jpa.properties.javax.persistence.schema-generation.scripts.create-target=create.sql,\

--spring.jpa.properties.hibernate.hbm2ddl.delimiter=\;

In the above command, we basically have overridden four run-time config properties earlier shown in

yml file for DDL generation:

1) disabled Flyway

2) specified schema-generation type

3) specified the DDL file name to be generated

4) specified the delimiter character, the end of statement character for SQL statements generated in the DDL file.

All backslashes (

\) are just shell line-breakers except the very last one to escape the end of statement delimiter character (

;) in the generated DDL script.

If you are lucky, you will have

create.sql file generated in the directory you ran this command from. Examine the DDL generated before copying it into Flyway Base SQL script.

Simply bring up your application. Flyway should be flying happily taking the base DDL script file and applying it to your database.

Option-3 (My preferred option): Run with your runnable jar

Have a runnable jar created (typically under

target directory in your module). Simply bring up the application by passing all those properties to override on the command line. This way, you can stay away from Maven and from all issues it brings in along with it. An example is shown below:

For action

create:

java --enable-preview -Dspring.profiles.active=local -jar <path/to/your/jar-file/executable/jar-file.jar> \

--spring.flyway.enabled=false \

--spring.jpa.properties.javax.persistence.schema-generation.scripts.action=create \

--spring.jpa.properties.javax.persistence.schema-generation.scripts.create-target=create.sql \

--spring.jpa.properties.hibernate.hbm2ddl.delimiter=\;

For action

update:

java --enable-preview -Dspring.profiles.active=local -jar <path/to/your/jar-file/executable/jar-file.jar> \

--spring.flyway.enabled=false \

--spring.jpa.properties.javax.persistence.schema-generation.scripts.action=update \

--spring.jpa.properties.javax.persistence.schema-generation.scripts.create-target=update.sql \

--spring.jpa.properties.hibernate.hbm2ddl.delimiter=\;

Again, all backslashes (

\) are just shell line-breakers except the very last one to escape the end of statement delimiter character (

;) in the generated DDL script.

If you want to run it from IntelliJ instead of command-line, setup a Run Configuration as shown below:

Incremental DDL changes

Once you have base DDL Flyway script applied, as you progress with your development, there will be changes made to domain model as it starts to evolve. As and when your domain model goes through changes, you need to put corresponding Flyway SQL migration scripts in place.

I'VE NOT FOUND A WAY TO GET THIS DONE!

NOTE: Though I have not found an action like

update authoritatively documented anywhere, I just tried and it does work and generating something but not very useful. All I tried was changing action to

update from

create and

create-target to

update.sql from

create.sql.

If you have your previously generated

create.sql/update.sql file hanging around and use the same for incremental changes, it simply gets appended with the resulted incremental DDL statements. That is definitely not what you want. So, make sure that you delete or use a different name.

Once, you have the incremental DDL script, examine it, and copy it to new Flyway script file. Bring up the app to have Flyway flying again taking the newly added script with it and applying it to the Database.

Leverage JPA Annotations as much as you can in order to generate your DDL accurately

A good Database schema design should have all data constraints applied. These constraints include primary key constraints, foreign key constraints, unique constrains etc. JPA offers annotations that can be leveraged in generating constraint creation DDL commands as well.

PRIMARY KEY Constraint

public class MyDomain {

@Id

@GeneratedValue(strategy = GenerationType.IDENTITY)

@Column(nullable = false, updatable = false)

private Long id;

...

}

The above JPA annotation generates the following DDL script:

CREATE TABLE my_domain {

id SERIAL PRIMARY KEY,

...

}

When the type is

SERIAL PostgreSQL generates a table specific sequence

my_domain_seq and with IDENTITY generation strategy, this sequence is used both by database and JPA.

UNIQUE KEY Constraint

@Table(

uniqueConstraints = @UniqueConstraint(

columnNames = {"prop1", "prop2"}

name = "my_domain_p1_p2_uk"

)

)

public class MyDomain {

...

String prop1;

String prop2;

}

The above JPA annotation generates the following DDL script:

ALTER TABLE my_domain ADD CONSTRAINT my_domain_p1_p2_uk UNIQUE (prop1, prop2);

FOREIGN KEY Constraint

public class MyDomain {

...

@ManyToOne(fetch = FetchType.EAGER, optional = false)

@JoinColumn(

name = "my_prop_type_id"

foreignKey = @ForeignKey(name = "my_domain_mpt_fk"),

nullable = false, insertable = flase, updatable = false

)

private MyPropType myPropType;

...

}

The above JPA annotation generates the following DDL script:

ALTER TABLE my_domain ADD CONSTRAINT my_domain_mpt_fk FOREIGN KEY (my_prop_type_id) REFERENCES my_prop_type;

TIPS

Get that missing Semicolon back

Without explicitly setting the property

spring.jpa.properties.hibernate.hbm2ddl.delimiter=; the generated DDL statements will not end with semi-colon. If you set it on the command line instead of the env specific application

yml/

properties file, make sure to escape

; with

\ as shown below:

spring.jpa.properties.hibernate.hbm2ddl.delimiter=\;

Turn Flyway on/off

Flyway can be turned on/off by setting the property

spring.flyway.enabled=true/false. It can either be set in application

yml/

properties files or on the command line when

mvn/

mvnw is run. I am not happy with overriding on the maven command line is as it takes up my time with stupid errors that I do not want to break my head with anymore, use this option at your own discretion :)

Happy Coding!

Have a limited but safe flight with Flyway and Maven in a Spring Boot application!!